Introduction

This lab continued the survey conducted the previous week: we resurveyed our terrain so that the data would represent its features more accurately. During the resurvey, it was crucial to normalize our data, organizing attributes and tables to minimize data redundancy and ultimately better represent the study area. After normalization was complete, the terrain was visualized in ArcMap and ArcScene using several interpolation methods: inverse distance weighted (IDW), natural neighbors, kriging, spline, and triangulated irregular network (TIN).

Methods

The first step was to enter the x, y, and z data into Excel, producing the table that I then imported into ArcMap through ArcCatalog. This table was used to create a point feature class from the measurements taken in the field. Before it could be imported, the Excel table had to be normalized into a format that ArcMap could use (Fig. 1).

Fig. 1: The normalized Excel table with the x, y, and z data that was then imported into ArcMap

Fig. 2: The feature class created from the Excel table. Each point is evenly spaced along the x and y axes, and each point also carries a z (elevation) value
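As a sketch of the normalization step, assuming (this is not stated in the lab) that the field sheet recorded elevations as a grid with one row per y position and one column per x position, the following Python flattens it into the one-row-per-point x, y, z layout that a point feature class expects. The function names, the sample spacing, and the origin are hypothetical.

```python
import csv
import io


def grid_to_xyz(grid, spacing=1.0, x0=0.0, y0=0.0):
    """Flatten a grid of elevations into (x, y, z) rows.

    Hypothetical layout: grid[row][col] is the elevation measured at
    x = x0 + col * spacing and y = y0 + row * spacing.
    """
    rows = []
    for r, values in enumerate(grid):
        for c, z in enumerate(values):
            if z is None:  # skip cells that were never measured
                continue
            rows.append((x0 + c * spacing, y0 + r * spacing, float(z)))
    return rows


def to_csv(rows):
    """Write the normalized rows as CSV text with an x, y, z header."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["x", "y", "z"])
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    field_sheet = [[4.0, 5.5, 6.0],
                   [3.5, None, 5.0]]
    print(to_csv(grid_to_xyz(field_sheet, spacing=5.0)))
```

Each output row holds exactly one point, so no coordinate is stored twice and the table maps directly onto point features.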

The next step was to apply the various interpolation techniques to visually represent the surveyed terrain and ultimately decide which model best represents the collected data.

Deterministic Interpolation Methods:

These methods create a surface directly from the surrounding measured values, or from mathematical formulas that control how smooth the surface is.

IDW:

Inverse distance weighted interpolation determines cell values using a weighted combination of sample points. In effect, it averages the values of nearby sample points to estimate each cell, with each point's weight decreasing as its distance from the cell increases. As a result, sample points close to a cell have more influence on its value than points farther away.

Fig. 3: IDW Interpolation
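A minimal pure-Python sketch of the weighting described above, assuming a power of 2 (a common choice) and using every sample point rather than the limited search neighborhood a GIS tool would normally apply. The function name `idw` and the sample coordinates are illustrative.

```python
import math


def idw(samples, x, y, power=2.0):
    """Inverse distance weighted estimate at (x, y).

    samples: list of (xi, yi, zi) tuples. Each weight is 1 / d**power,
    so closer samples have more influence on the estimate.
    """
    num = 0.0
    den = 0.0
    for xi, yi, zi in samples:
        d = math.hypot(x - xi, y - yi)
        if d == 0.0:
            return zi  # the location sits on a sample: return it exactly
        w = 1.0 / d ** power
        num += w * zi
        den += w
    return num / den


if __name__ == "__main__":
    pts = [(0.0, 0.0, 10.0), (2.0, 0.0, 20.0), (0.0, 2.0, 30.0)]
    print(idw(pts, 1.0, 0.0))
```

Because each sample's influence falls off sharply around it, isolated high or low samples tend to form small "bullseyes", which is one plausible source of the spiky appearance noted in the Discussion.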

Natural Neighbors:

Natural neighbors interpolation uses an algorithm that finds the closest subset of sample points to a point of interest, weights each of them according to the proportion of area it contributes, and combines them to calculate an output value. This method does not infer trends and does not produce peaks, pits, valleys, etc. that are not already represented in the input data. The resulting surface passes through the input points and is smooth everywhere except at the locations of the input data. This method is known to work well with both regularly and irregularly distributed data.

Fig. 4: Natural Neighbors Interpolation
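Exact natural neighbor interpolation needs a Voronoi diagram, which is too long for a short example. The sketch below instead uses a discrete approximation of Sibson's natural neighbor method on an integer grid (not necessarily how ArcMap computes it): every cell looks up its nearest sample and passes that sample's value to all cells within that nearest-sample distance, and each output cell averages the values it receives. The grid size, the integer sample coordinates, and the function name are assumptions.

```python
import math


def discrete_natural_neighbor(samples, width, height):
    """Approximate natural neighbor (Sibson) interpolation on a grid.

    samples: list of (col, row, z) with integer grid coordinates.
    Returns a height x width list of lists of interpolated values.
    """
    total = [[0.0] * width for _ in range(height)]
    count = [[0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            # Distance to, and value of, the sample nearest this cell.
            r, z = min(
                (math.hypot(col - sc, row - sr), sz) for sc, sr, sz in samples
            )
            # Pass z to every cell within distance r of this cell
            # (always including the cell itself, so count is never 0).
            reach = int(math.floor(r))
            for dr in range(-reach, reach + 1):
                for dc in range(-reach, reach + 1):
                    rr, cc = row + dr, col + dc
                    if (0 <= rr < height and 0 <= cc < width
                            and math.hypot(dc, dr) <= r):
                        total[rr][cc] += z
                        count[rr][cc] += 1
    return [[total[i][j] / count[i][j] for j in range(width)]
            for i in range(height)]


if __name__ == "__main__":
    pts = [(0, 0, 1.0), (5, 0, 3.0), (0, 5, 2.0), (5, 5, 4.0)]
    for line in discrete_natural_neighbor(pts, 6, 6):
        print(" ".join(f"{v:4.2f}" for v in line))
```

Because every output value is an average of sample values, the result never rises above the highest sample or drops below the lowest, which matches the "no new peaks or pits" behavior described above.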

Spline:

Spline estimates values using a mathematical function that minimizes the overall curvature of the surface, resulting in a smooth surface that passes exactly through all of the data points.

Fig. 5: Spline Interpolation
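ArcMap's Spline tool offers regularized and tension variants; the sketch below is instead a plain thin-plate spline (radial basis r² log r plus a linear trend), a standard minimum-curvature interpolator and not necessarily the exact formulation ArcMap uses. It solves for the weights with a small Gaussian elimination so it needs no third-party libraries. The function names are hypothetical.

```python
import math


def _solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def _phi(r):
    """Thin-plate radial basis r^2 log r, taken as 0 at r = 0."""
    return r * r * math.log(r) if r > 0 else 0.0


def fit_thin_plate_spline(samples):
    """Return f(x, y) that passes exactly through every (x, y, z) sample.

    Needs at least three distinct, non-collinear sample points.
    """
    n = len(samples)
    size = n + 3
    a = [[0.0] * size for _ in range(size)]
    b = [0.0] * size
    for i, (xi, yi, zi) in enumerate(samples):
        for j, (xj, yj, _) in enumerate(samples):
            a[i][j] = _phi(math.hypot(xi - xj, yi - yj))
        # Linear trend terms 1, x, y and the matching side conditions.
        for k, p in enumerate((1.0, xi, yi)):
            a[i][n + k] = p
            a[n + k][i] = p
        b[i] = zi
    coef = _solve(a, b)
    w, (c0, cx, cy) = coef[:n], coef[n:]

    def f(x, y):
        bend = sum(wi * _phi(math.hypot(x - xi, y - yi))
                   for wi, (xi, yi, _) in zip(w, samples))
        return c0 + cx * x + cy * y + bend

    return f


if __name__ == "__main__":
    pts = [(0, 0, 0.0), (4, 0, 1.0), (0, 4, 0.5), (4, 4, 2.0), (2, 2, 3.0)]
    surface = fit_thin_plate_spline(pts)
    print(surface(2, 2), surface(1, 3))
```

If the samples already lie on a plane, the radial weights come out as zero and the spline reduces to that plane, which is a quick sanity check on the minimum-curvature idea.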

Geostatistical Interpolation Methods:

These methods use statistical models to describe the relationships among data points. They can create a prediction surface and also provide a measure of the accuracy of the predictions.

Kriging:

Kriging describes the spatial variation and statistical relationships among measured points using statistical models. It assumes that the distance or direction between sample points reflects a spatial correlation that can help explain variation in the surface. It fits a mathematical function to a specified number of points, or to all points within a specified radius, to determine the output value for each location, which helps ensure the accuracy of the created surface.

Fig. 6: Kriging Interpolation
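A minimal ordinary kriging sketch in pure Python. It assumes a spherical semivariogram whose nugget, partial sill, and range are hypothetical hand-picked defaults; in practice these parameters are fitted to the data's empirical semivariogram. Solving the kriging system with a Lagrange multiplier forces the weights to sum to one, and the same solve yields the kriging variance, which is the kind of accuracy measure the geostatistical methods provide.

```python
import math


def _solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def spherical(h, nugget=0.0, psill=1.0, rng=10.0):
    """Spherical semivariogram (hypothetical parameters); gamma(0) = 0."""
    if h == 0.0:
        return 0.0
    if h >= rng:
        return nugget + psill
    t = h / rng
    return nugget + psill * (1.5 * t - 0.5 * t ** 3)


def ordinary_kriging(samples, x, y, gamma=spherical):
    """Return (estimate, kriging variance) at (x, y) from (xi, yi, zi)."""
    n = len(samples)
    a = [[0.0] * (n + 1) for _ in range(n + 1)]
    b = [0.0] * (n + 1)
    for i, (xi, yi, _) in enumerate(samples):
        for j, (xj, yj, _) in enumerate(samples):
            a[i][j] = gamma(math.hypot(xi - xj, yi - yj))
        a[i][n] = 1.0  # Lagrange multiplier column
        a[n][i] = 1.0  # unbiasedness row: weights sum to 1
        b[i] = gamma(math.hypot(xi - x, yi - y))
    b[n] = 1.0
    sol = _solve(a, b)
    w, mu = sol[:n], sol[n]
    estimate = sum(wi * zi for wi, (_, _, zi) in zip(w, samples))
    variance = sum(wi * gi for wi, gi in zip(w, b[:n])) + mu
    return estimate, variance


if __name__ == "__main__":
    pts = [(0.0, 0.0, 1.0), (3.0, 0.0, 2.0), (0.0, 4.0, 3.0), (5.0, 5.0, 4.0)]
    print(ordinary_kriging(pts, 2.0, 2.0))
```

With a zero nugget the estimate reproduces each sample exactly and the variance there is zero; away from the samples the variance grows, showing where the prediction is least certain.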

TIN:

A TIN is a vector-based digital representation of surface morphology. It is constructed by connecting a set of vertices (the sample points) with edges to form a network of non-overlapping triangles that make up a continuous surface. TINs work well with steep features such as ridgelines: because a TIN's resolution follows the density of the input points, it can represent such features in more detail where more points are available.

Fig. 7: TIN
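Once the triangles exist (GIS software typically builds them as a Delaunay triangulation of the sample points), the elevation anywhere inside a triangle is found by linear interpolation across its face. The sketch below shows only that step, using barycentric coordinates; building the triangulation itself is left to the GIS. The function name `tin_elevation` is hypothetical.

```python
def tin_elevation(tri, x, y):
    """Linearly interpolate z at (x, y) on one TIN face.

    tri: three (x, y, z) vertices. Returns None if (x, y) lies outside
    the triangle.
    """
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = tri
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    if det == 0:
        raise ValueError("degenerate (zero-area) triangle")
    # Barycentric coordinates: how much each vertex contributes.
    l1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    l2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    l3 = 1.0 - l1 - l2
    if min(l1, l2, l3) < -1e-12:
        return None  # a negative weight means the point is outside
    return l1 * z1 + l2 * z2 + l3 * z3


if __name__ == "__main__":
    face = [(0.0, 0.0, 10.0), (4.0, 0.0, 20.0), (0.0, 4.0, 30.0)]
    print(tin_elevation(face, 1.0, 1.0))
```

Each face is a flat plane, so a TIN can show sharp breaks along ridgelines where triangle edges meet, but it can also look faceted when points are sparse.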

Each method modeled the terrain in its own way, some more successfully than others.

Discussion:

This assignment introduced many interpolation methods, and each one has its pros and cons. Kriging appeared to be the least accurate representation of our landscape. This model generalized too much and seemed to oversimplify the terrain, drastically lowering the hills and ridges while extending the valley considerably.

The IDW interpolation also did not give the desired result. The surface appeared blotchy and spiky, which is unrealistic compared to our terrain, and the model seemed to create more peaks and variations in elevation than the terrain actually had.

Natural neighbors seemed to model the terrain well at first glance, but on closer inspection it oversimplified the terrain in certain areas.

The TIN appeared to match the elevation well; however, it exaggerates parts of the terrain. A different sampling method, such as taking more sample points in areas with greater changes in elevation and fewer in areas with little change, might have produced a more accurate model.
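The sampling idea in the paragraph above can be sketched as a simple rule: flag surveyed grid cells whose elevation differs from a neighboring cell by more than a threshold, and plan extra sample points there. The 4-neighbor rule, the threshold, and the function name are assumptions for illustration, not the procedure the team followed.

```python
def cells_to_densify(grid, threshold):
    """Return (row, col) cells whose elevation differs from any 4-neighbor
    by more than `threshold`; these are candidates for extra sample points.
    """
    rows, cols = len(grid), len(grid[0])
    flagged = []
    for r in range(rows):
        for c in range(cols):
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if (0 <= rr < rows and 0 <= cc < cols
                        and abs(grid[r][c] - grid[rr][cc]) > threshold):
                    flagged.append((r, c))
                    break  # one steep neighbor is enough to flag the cell
    return flagged


if __name__ == "__main__":
    survey = [[0, 0, 0],
              [0, 0, 5],
              [0, 0, 5]]
    print(cells_to_densify(survey, 2))
```

Flat cells are left at the original spacing, so extra effort goes only where the elevation changes quickly.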

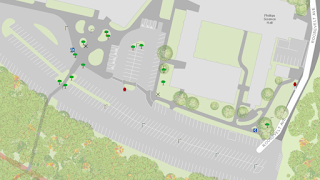

Spline (Fig. 8) seemed to fit our data best. It is more detailed than the kriging model and smoother than the IDW model. However, although spline appeared to work best, it still did not represent the landscape well enough. The ridge and the valley were the least accurate areas; these features could be represented better by taking more coordinate points there.

Fig. 8: Spline Interpolation for Resurveyed Terrain

We then went back and recreated the terrain from the previous activity as closely as we could to improve the accuracy of our output model. While recreating it, we decided to smooth out the surfaces, since we had agreed to use spline interpolation for our final output. We also added more sample points so that the terrain would be displayed in greater detail.

Conclusion:

After working on this lab for two weeks, I learned many ways to collect geospatial data and saw what works well with each interpolation method. It was nice to be able to go back over this project and fix the mistakes we noticed. I often want to go back over labs and learn from previous mistakes, so it was nice to actually be able to do that with my teammates. It was also extremely interesting to relate a lab to the real world: I saw how a plan did not go as expected on the initial run and learned how to find potential solutions to the issues we encountered. It is important not to rely on a single sampling technique; instead, one should adapt the sampling technique to best represent each terrain. For instance, it is better to take more sample points in areas with greater changes in elevation and fewer points in areas without much change in elevation, which saves both time and money in the real world. It was a breath of fresh air to have to think for ourselves about how best to increase the accuracy of the study area. We needed to use our time wisely, be patient, work well with others, be flexible, and learn to troubleshoot. It is of utmost importance to plan ahead; this may seem cumbersome at the beginning, but it saves a great deal of time and headache in the end.