Introduction:


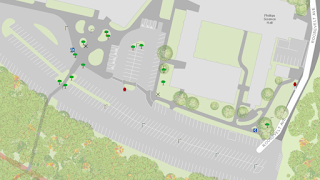

Figure 1: Study area at the Priory in Eau Claire, WI

This is a follow-up to a previous field activity in which the class created navigation maps. The class was split into groups of two, and each group member created two navigation maps. The best map was then chosen for this activity by comparing how cluttered each map was and which coordinate system it used. The best map had minimal clutter and used the UTM coordinate system. The UTM map was used instead of the decimal degree map because it is far easier and more accurate to navigate using a linear unit such as feet or meters than using decimal degrees: a distance read off a UTM grid is a ground distance, while the ground length of a degree of longitude shrinks with latitude. The objective of this lab was to navigate through the Priory in Eau Claire, Wisconsin (Figure 1) using the navigation map and an eTrex GPS unit (Figure 2).
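The advantage of a linear unit can be illustrated with a short Python sketch. It assumes a spherical Earth and an approximate latitude of 44.8° N for Eau Claire (neither value comes from the lab handout), and compares the ground length of 0.001° of latitude and of longitude with a straight-line distance computed directly from UTM eastings and northings. The UTM readings are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius; spherical approximation (assumption)


def meters_per_degree(lat_deg):
    """Approximate ground length (m) of one degree of latitude and of
    longitude at the given latitude, treating the Earth as a sphere."""
    per_deg_lat = 2 * math.pi * EARTH_RADIUS_M / 360
    # Meridians converge toward the poles, so a degree of longitude shrinks with cos(latitude).
    per_deg_lon = per_deg_lat * math.cos(math.radians(lat_deg))
    return per_deg_lat, per_deg_lon


def utm_distance(e1, n1, e2, n2):
    """Straight-line distance (m) between two UTM points in the same zone."""
    return math.hypot(e2 - e1, n2 - n1)


# Assumed approximate latitude of Eau Claire, WI.
lat_m, lon_m = meters_per_degree(44.8)
print(f"0.001 deg of latitude  ~ {0.001 * lat_m:.0f} m")
print(f"0.001 deg of longitude ~ {0.001 * lon_m:.0f} m")

# Hypothetical UTM readings: 30 m east and 40 m north is simply 50 m.
print(utm_distance(500000, 4960000, 500030, 4960040))
```

Under these assumptions the script reports about 111 m for 0.001° of latitude but only about 79 m for 0.001° of longitude, so the same decimal change means different ground distances in each direction. The UTM difference, by contrast, is 50.0 m with no conversion at all.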

| Figure 2: Etrex GPS unit |

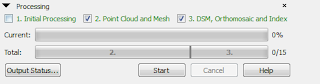

Methods:


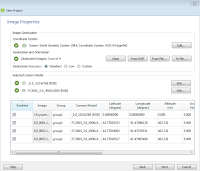

Figure 3: Coordinates of marked locations

Each group had five locations, previously marked by Dr. Hupy with pink ribbons labeled with site numbers (Figure 3). The GPS unit was used to navigate through the study area, to log our path while navigating, and to mark the locations of the course. When Dr. Hupy handed out the paper maps, several student errors became apparent. After comparing maps, many groups noticed that their maps used different coordinate systems. The group's map also had the latitude labels along the side cut off, so they had to be filled in using other groups' maps.

Next, the groups marked the five locations on their maps by plotting the given coordinates (Figure 3). The points as plotted on the map were slightly off from their true locations, so the GPS unit was used to find the true locations in the field.
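Plotting a location from its coordinates amounts to linear interpolation across the map's UTM extent. The Python sketch below assumes a hypothetical map frame: the `MapFrame` class, the UTM extent, and the printed size are invented for illustration and are not the actual Priory map.

```python
from dataclasses import dataclass


@dataclass
class MapFrame:
    """Hypothetical paper-map frame: the UTM extent of the mapped area and
    the printed size of that area on the page, in millimeters."""
    e_min: float
    n_min: float
    e_max: float
    n_max: float
    width_mm: float
    height_mm: float

    def to_page(self, easting, northing):
        """Convert a UTM coordinate to (x, y) millimeters measured from the
        lower-left corner of the map frame by linear interpolation."""
        x = (easting - self.e_min) / (self.e_max - self.e_min) * self.width_mm
        y = (northing - self.n_min) / (self.n_max - self.n_min) * self.height_mm
        return x, y


# Hypothetical frame: 600 m x 800 m printed at 150 mm x 200 mm (a 1:4000 scale).
frame = MapFrame(e_min=617000, n_min=4963000, e_max=617600, n_max=4963800,
                 width_mm=150, height_mm=200)
x, y = frame.to_page(617300, 4963400)
print(f"plot at {x:.1f} mm right, {y:.1f} mm up")  # plot at 75.0 mm right, 100.0 mm up
```

At the assumed 1:4000 scale, a 1 mm plotting error on paper corresponds to 4 m on the ground, which is one plausible reason hand-plotted points end up slightly off their true locations.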

The final step was to go out into the field and navigate to the points using the given coordinates, the paper map, and the GPS unit (Figure 4).
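Navigating to a point with a GPS that displays UTM coordinates comes down to comparing the current easting and northing with the target's. The Python sketch below reduces that comparison to a distance and a grid bearing; the function name `course_to_target` and the coordinates are hypothetical, not actual course values.

```python
import math


def course_to_target(cur_e, cur_n, tgt_e, tgt_n):
    """Return (delta_east, delta_north, distance_m, grid_bearing_deg) from the
    current UTM position to a target in the same zone. The bearing is measured
    clockwise from grid north, in the range [0, 360)."""
    de = tgt_e - cur_e  # positive: target lies to the east
    dn = tgt_n - cur_n  # positive: target lies to the north
    distance = math.hypot(de, dn)
    # atan2(east, north) gives an angle clockwise from north; % 360 removes negatives.
    bearing = math.degrees(math.atan2(de, dn)) % 360
    return de, dn, distance, bearing


# Hypothetical GPS reading and target.
de, dn, dist, brg = course_to_target(617250, 4963420, 617310, 4963340)
print(f"go {de:+.0f} m east, {dn:+.0f} m north: {dist:.0f} m at {brg:.0f} deg grid")
# go +60 m east, -80 m north: 100 m at 143 deg grid
```

Collapsing the two offsets into a single distance and bearing is one way to avoid tracking the northing and easting separately while walking. Note that the bearing is relative to grid north; a magnetic compass would need a declination correction, which this sketch does not apply.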


Figure 4: The track log from the GPS unit while navigating the Priory

Discussion:

I noticed that it was sometimes difficult to navigate using the GPS. The group backtracked a few times because it was hard to get our bearings. It was especially difficult to keep track of both the northing and the easting while walking. The terrain varied greatly: one area had thick underbrush, another had easily accessible deer paths or a large mowed path, and another was an open pine tree farm.